Artificial Intelligence (AI) models are increasingly critical in automating decision‑making across industries. While they offer efficiency and predictive power, AI systems are not immune to degradation over time.

Model drift is the gradual decline in AI performance due to changes in data distribution, environment, or user behavior.

Research shows that up to 91% of machine learning models experience performance degradation due to model drift if not actively monitored and maintained.

Understanding this silent risk is essential for companies relying on AI for long‑term operations. Proactive management of model drift helps AI systems stay accurate, relevant, and reliable.

This article explores the causes, impact, detection methods, and mitigation strategies of model drift, providing practical insights for mid‑market companies aiming for sustainable AI deployment.

Model drift occurs when an AI model’s predictions become less accurate over time due to changes in input data or business conditions.
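To make the idea concrete, here is a toy simulation in Python. It assumes a hypothetical one-feature classifier that learns a decision threshold, and a hypothetical change in conditions that moves the true boundary from 0.5 to 0.7 (a form of concept drift). The names `sample`, `fit_threshold`, and `accuracy` are illustrative, not taken from any library.

```python
import random

def sample(n, boundary, seed):
    """Draw n inputs uniformly from [0, 1); the true label is 1 when x > boundary."""
    rng = random.Random(seed)
    return [(x, int(x > boundary)) for x in (rng.random() for _ in range(n))]

def accuracy(threshold, data):
    """Fraction of points where the thresholded prediction matches the label."""
    return sum(int(x > threshold) == y for x, y in data) / len(data)

def fit_threshold(data):
    """'Train' the model by picking the grid threshold with the best accuracy."""
    candidates = [i / 100 for i in range(101)]
    return max(candidates, key=lambda t: accuracy(t, data))

# Training data reflects the world at deployment time (true boundary at 0.5).
train = sample(2000, boundary=0.5, seed=1)
model = fit_threshold(train)

# Hypothetical later period: the real relationship has shifted to 0.7.
live = sample(2000, boundary=0.7, seed=2)

print(f"learned threshold:    {model:.2f}")
print(f"accuracy at training: {accuracy(model, train):.3f}")
print(f"accuracy after drift: {accuracy(model, live):.3f}")
```

Because the model is never updated, inputs between 0.5 and 0.7 are now misclassified, so accuracy falls from near-perfect to roughly 80% even though nothing in the model itself changed.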

Mid-market companies face unique challenges when managing AI over time.

Detecting model drift requires continuous monitoring and analytical tools.

Performance Metrics Monitoring: Track metrics such as accuracy, F1 score, and AUC over time. A sudden or sustained drop can indicate drift, although these metrics depend on ground-truth labels, which often arrive with a delay.
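A minimal sketch of metric tracking in Python, assuming ground-truth labels arrive for each batch of predictions. `MetricMonitor` is a hypothetical class, not a library API, and the 0.90 baseline, 0.05 tolerance, and three-batch window are illustrative values. F1 is used here because it only needs hard labels; AUC would additionally require the model's predicted scores.

```python
from collections import deque

def f1_score(y_true, y_pred):
    """Binary F1 score (1 = positive class); returns 0.0 when there are no true positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

class MetricMonitor:
    """Flags drift when the rolling mean F1 of recent labeled batches falls
    more than `tolerance` below a baseline measured at validation time."""

    def __init__(self, baseline, tolerance=0.05, window=3):
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # keeps only the last `window` scores

    def update(self, y_true, y_pred):
        """Record one batch and return True if the rolling F1 breaches the threshold."""
        self.recent.append(f1_score(y_true, y_pred))
        rolling = sum(self.recent) / len(self.recent)
        return rolling < self.baseline - self.tolerance

monitor = MetricMonitor(baseline=0.90, tolerance=0.05, window=3)
batches = [
    ([1, 1, 0, 0, 1], [1, 1, 0, 0, 1]),  # F1 = 1.0
    ([1, 1, 0, 0, 1], [1, 0, 0, 0, 1]),  # F1 = 0.8
    ([1, 1, 0, 0, 1], [0, 0, 1, 0, 1]),  # F1 = 0.4
]
alerts = [monitor.update(y_true, y_pred) for y_true, y_pred in batches]
print(alerts)  # [False, False, True]
```

Averaging over a short window keeps a single weak batch (the second one) from raising an alert on its own, while a continued decline still crosses the threshold.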

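Data Distribution Analysis: Compare the distribution of incoming data against the training dataset. Statistical measures such as the two-sample Kolmogorov-Smirnov test or Jensen-Shannon divergence quantify how far the two distributions have moved apart, and unlike performance metrics they do not require labels.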
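Both measures can be sketched in plain Python to show what they actually compute. This is a simplified illustration: the bin edges, sample sizes, and synthetic normal data are hypothetical, and in production one would more likely use an established implementation such as SciPy's `scipy.stats.ks_2samp` or `scipy.spatial.distance.jensenshannon` (the latter returns the Jensen-Shannon *distance*, which is the square root of the divergence).

```python
import bisect
import math
import random

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the two empirical CDFs (0 = identical samples, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for x in ref + cur:  # the gap between step CDFs can only change at observed values
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap

def histogram(values, edges):
    """Share of values per bin; values outside the edges go into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), len(counts) - 1)] += 1
    return [c / len(values) for c in counts]

def js_divergence(p, q):
    """Jensen-Shannon divergence in bits (bounded between 0 and 1)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a, b):
        # Terms with a_i = 0 contribute nothing; b_i > 0 whenever a_i > 0 here.
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical data: one feature at training time, then two production batches.
rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(0.8, 1.0) for _ in range(5000)]  # the mean has moved
edges = [-4 + 0.5 * i for i in range(17)]  # 16 equal-width bins over [-4, 4]

for name, batch in [("stable", stable), ("shifted", shifted)]:
    ks = ks_statistic(training, batch)
    js = js_divergence(histogram(training, edges), histogram(batch, edges))
    print(f"{name:8s} KS={ks:.3f}  JS={js:.4f}")
```

The shifted batch yields a KS statistic of roughly 0.3 and a clearly larger JS divergence than the stable batch. What value should trigger an alert is not fixed by either measure; it is a threshold each team would need to calibrate against its own data.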

Residual Analysis: Analyze model errors (residuals, the gap between actual and predicted values) over time to identify patterns. Steadily increasing residual variance can signal drift.
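A minimal sketch in Python, assuming a regression model whose actual values become available some time after prediction. The function names, the window of 100 observations, and the 2x alert ratio are hypothetical choices; the synthetic data simply makes the error spread grow over time.

```python
import random
import statistics

def windowed_residual_variance(y_true, y_pred, window):
    """Population variance of residuals (actual - predicted) in consecutive,
    non-overlapping windows, oldest first."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return [
        statistics.pvariance(residuals[i:i + window])
        for i in range(0, len(residuals) - window + 1, window)
    ]

def variance_alert(variances, ratio=2.0):
    """Flag drift if the latest window's variance exceeds the first window's
    variance by more than `ratio` (a hypothetical threshold)."""
    return variances[-1] > ratio * variances[0]

# Hypothetical regression stream: errors grow noisier as conditions change.
rng = random.Random(7)
y_pred = [100.0 + 0.1 * i for i in range(400)]
y_true = [p + rng.gauss(0.0, 1.0 + i / 100) for i, p in enumerate(y_pred)]

variances = windowed_residual_variance(y_true, y_pred, window=100)
print([round(v, 2) for v in variances])
print("drift suspected:", variance_alert(variances))
```

Comparing against the first window treats the earliest period as the baseline; a team could equally use the residual variance measured on a held-out validation set.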

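Shadow Deployments: Run a candidate model (for example, a retrained version) alongside the live system on the same production traffic, without serving its predictions, and compare the outputs before it replaces the live model.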
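A simplified, synchronous sketch of the shadow pattern in Python. `shadow_compare` and the two threshold models are hypothetical. Real shadow deployments often call the candidate asynchronously so it adds no latency to live requests, and they compare both models against ground truth once labels arrive, not only against each other.

```python
def shadow_compare(live_model, candidate_model, requests, tolerance=0.0):
    """Serve the live model's predictions while recording, but never serving,
    the candidate's; return the served outputs and the disagreement rate."""
    served = []
    disagreements = 0
    for x in requests:
        live_pred = live_model(x)
        shadow_pred = candidate_model(x)  # logged for comparison only
        served.append(live_pred)
        if abs(live_pred - shadow_pred) > tolerance:
            disagreements += 1
    return served, disagreements / len(requests)

# Hypothetical models: the candidate was retrained after the boundary moved.
def live_model(x):
    return int(x > 0.5)

def candidate_model(x):
    return int(x > 0.7)

requests = [i / 10 for i in range(10)]
served, rate = shadow_compare(live_model, candidate_model, requests)
print(served)  # [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
print(rate)    # 0.2
```

Callers only ever see the live model's output, so a poorly performing candidate carries no production risk while it is being evaluated.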

Model drift arises from multiple internal and external factors.

Preventing model drift involves a combination of monitoring, retraining, and governance practices.

Unaddressed model drift can lead to incorrect predictions and flawed decision-making.

Several advanced tools and techniques are available for drift detection.

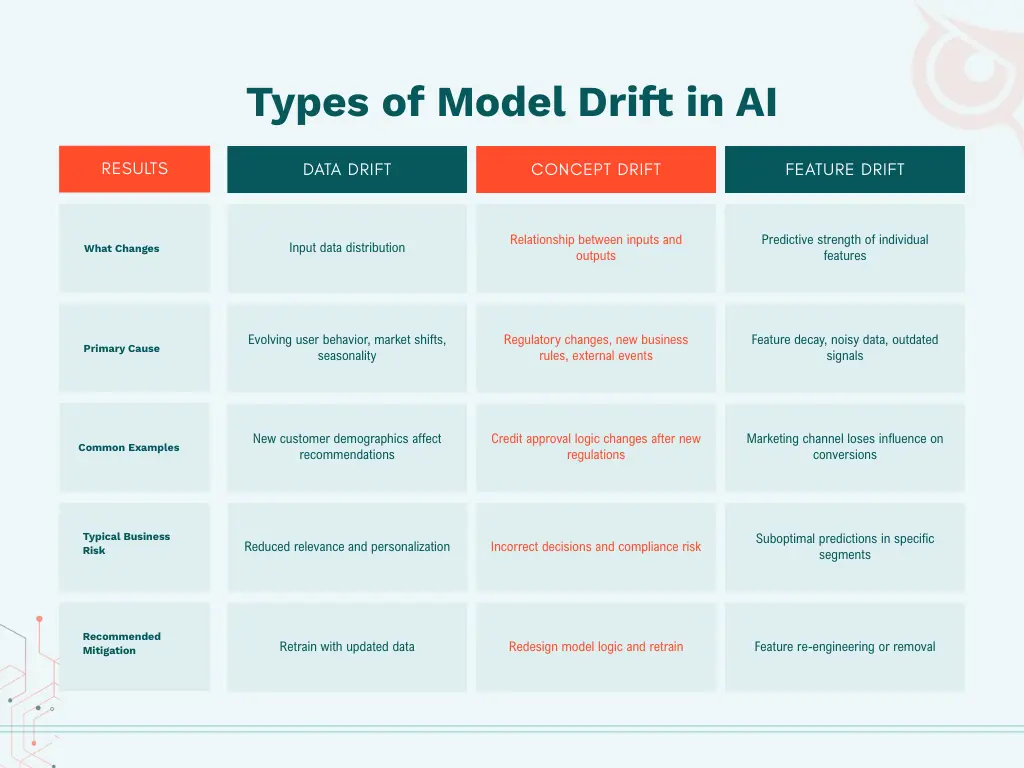

Model drift can occur in different forms, each requiring distinct detection and mitigation strategies.

Model drift is a silent but significant risk in long-term AI deployments. By understanding its causes, monitoring performance, and applying proactive retraining strategies, companies can safeguard AI reliability, ensure compliance, and protect business outcomes.

Implementing structured AI governance is critical for mid-market firms aiming to scale AI without compromising accuracy or customer trust.

Contact ioSENTRIX today to implement comprehensive AI governance, monitoring, and security solutions tailored to your business.

Models should be retrained whenever performance metrics show a consistent decline; absent such a trigger, a typical cadence is every 3–6 months, depending on how volatile the underlying data is.
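This retraining rule can be expressed as a small decision function. It is a sketch under hypothetical assumptions: `should_retrain`, the 0.03 tolerance, and the definition of "consistent" as three consecutive periods below threshold are illustrative, and the 180-day fallback simply takes the upper end of the 3–6 month range.

```python
from datetime import date, timedelta

def should_retrain(scores, baseline, last_trained, today,
                   tolerance=0.03, consecutive=3,
                   max_age=timedelta(days=180)):
    """Return the reason retraining looks warranted, or None.

    scores: evaluation metric per period, oldest first (e.g. weekly F1).
    """
    recent = scores[-consecutive:]
    # Trigger 1: a consistent decline, i.e. several periods in a row below threshold.
    if len(recent) == consecutive and all(s < baseline - tolerance for s in recent):
        return "sustained decline"
    # Trigger 2: a fallback schedule at the upper end of the 3-6 month range.
    if today - last_trained >= max_age:
        return "scheduled refresh"
    return None

print(should_retrain([0.91, 0.86, 0.85, 0.84], 0.90, date(2024, 1, 1), date(2024, 2, 1)))   # sustained decline
print(should_retrain([0.91, 0.89, 0.86, 0.90], 0.90, date(2024, 1, 1), date(2024, 2, 1)))   # None
print(should_retrain([0.90, 0.91], 0.90, date(2024, 1, 1), date(2024, 7, 15)))              # scheduled refresh
```

Requiring several consecutive low periods avoids retraining on a single noisy evaluation, while the age check keeps a model from going stale when its metrics cannot be measured reliably.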

Drift cannot be eliminated entirely, because data and business conditions inevitably change, but continuous monitoring, retraining, and governance can minimize its impact.

The primary indicators are performance metrics (accuracy, precision, recall, F1 score, AUC), residual variance, and changes in the distribution of incoming data.

Not equally: predictive models that rely on dynamic data, such as consumer behavior or financial transactions, are more prone to drift than models built on relatively stable data.

Automated monitoring tools, cloud-based AI platforms, and periodic audits allow companies to manage drift efficiently without a large data science team.
