Artificial Intelligence (AI) and are revolutionizing industries, but they also introduce unique and complex AI system security challenges.

Unlike traditional software, LLMs generate unpredictable outputs, adapt to diverse inputs, and often process sensitive data. This unpredictability makes them vulnerable to novel attack vectors that traditional security assessments may not fully address.

A Secure Architecture Review is a systematic evaluation of the design, infrastructure, and deployment of an AI or LLM system. It uncovers hidden attack surfaces and ensures that the system is protected against current and emerging threats.

For organizations adopting AI, especially generative AI, this review is not just beneficial, it’s essential for LLM threat mitigation and long-term resilience.

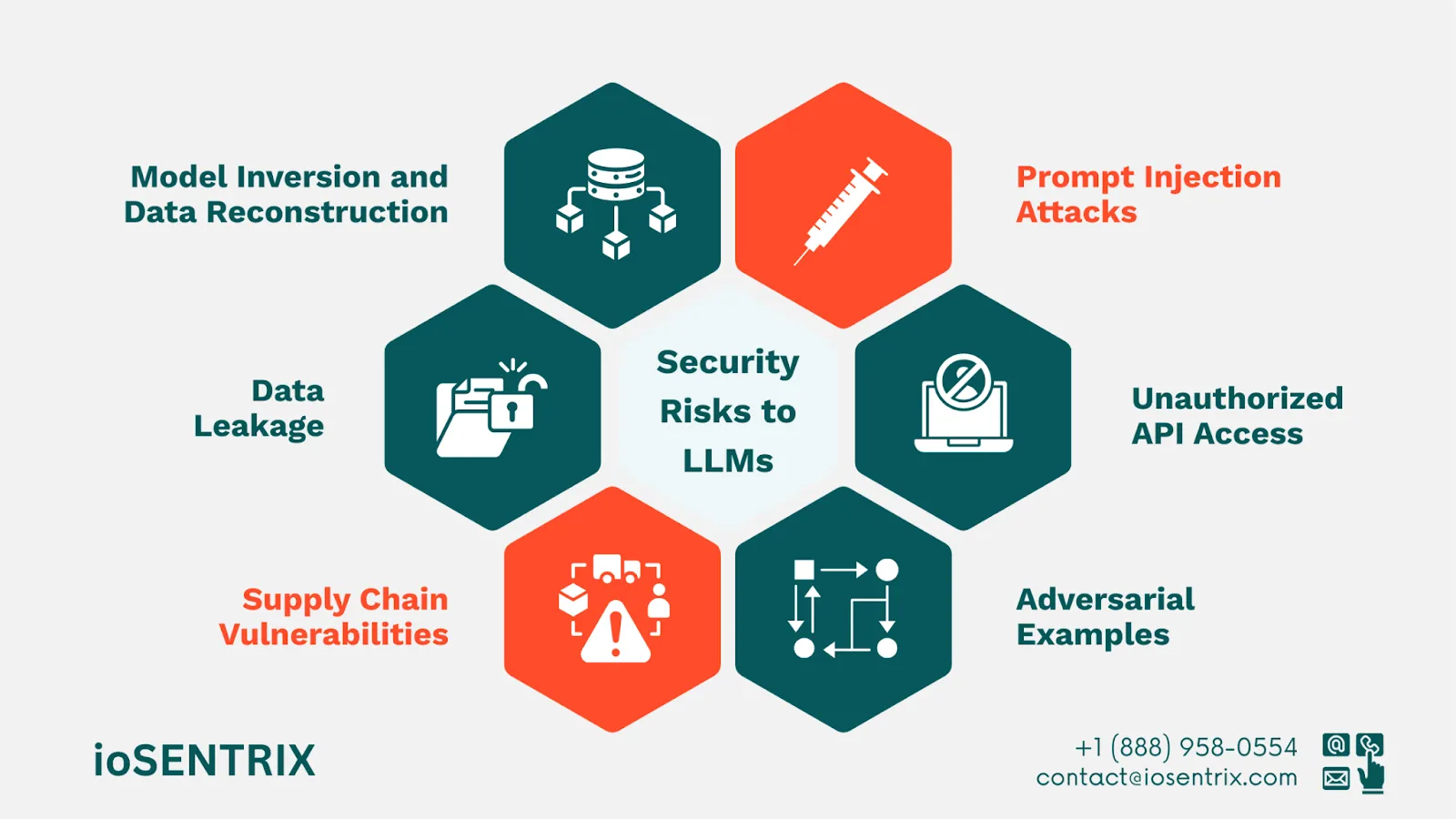

Recent studies and industry reports have identified several critical threats to LLM environments when secure architecture reviews and controls are not embedded into their design:

Prompt Injection Attacks: Malicious users can craft inputs that override system instructions or inject harmful commands. This makes prompt injection prevention a priority to protect sensitive data and stop unauthorized actions.

Model Inversion and Data Reconstruction: Attackers can reverse-engineer model outputs to recover training data, exposing confidential or proprietary information.

Data Leakage: Poor isolation of training data pipelines or inference APIs can unintentionally reveal sensitive information in generated outputs.

Adversarial Examples: Crafted inputs can manipulate model behavior, causing it to misclassify, hallucinate, or provide incorrect responses.

Supply Chain Vulnerabilities: Insecure third-party libraries, pre-trained models, or API integrations can become an entry point for attackers.

Unauthorized API Access: Weak authentication or access controls in inference endpoints can allow malicious users to query models in unintended ways.

Strong Encryption for Data at Rest and in Transit

A secure architecture review ensures that encryption protocols, like AES-256 for stored data and TLS 1.3/SSL for communications, are in place.

Advanced methods, such as homomorphic encryption and differential privacy, enhance AI system security by protecting data without compromising usability.

Continuous Monitoring and Anomaly Detection

Real-time monitoring helps with LLM threat mitigation by detecting unusual model behaviors or suspicious requests.

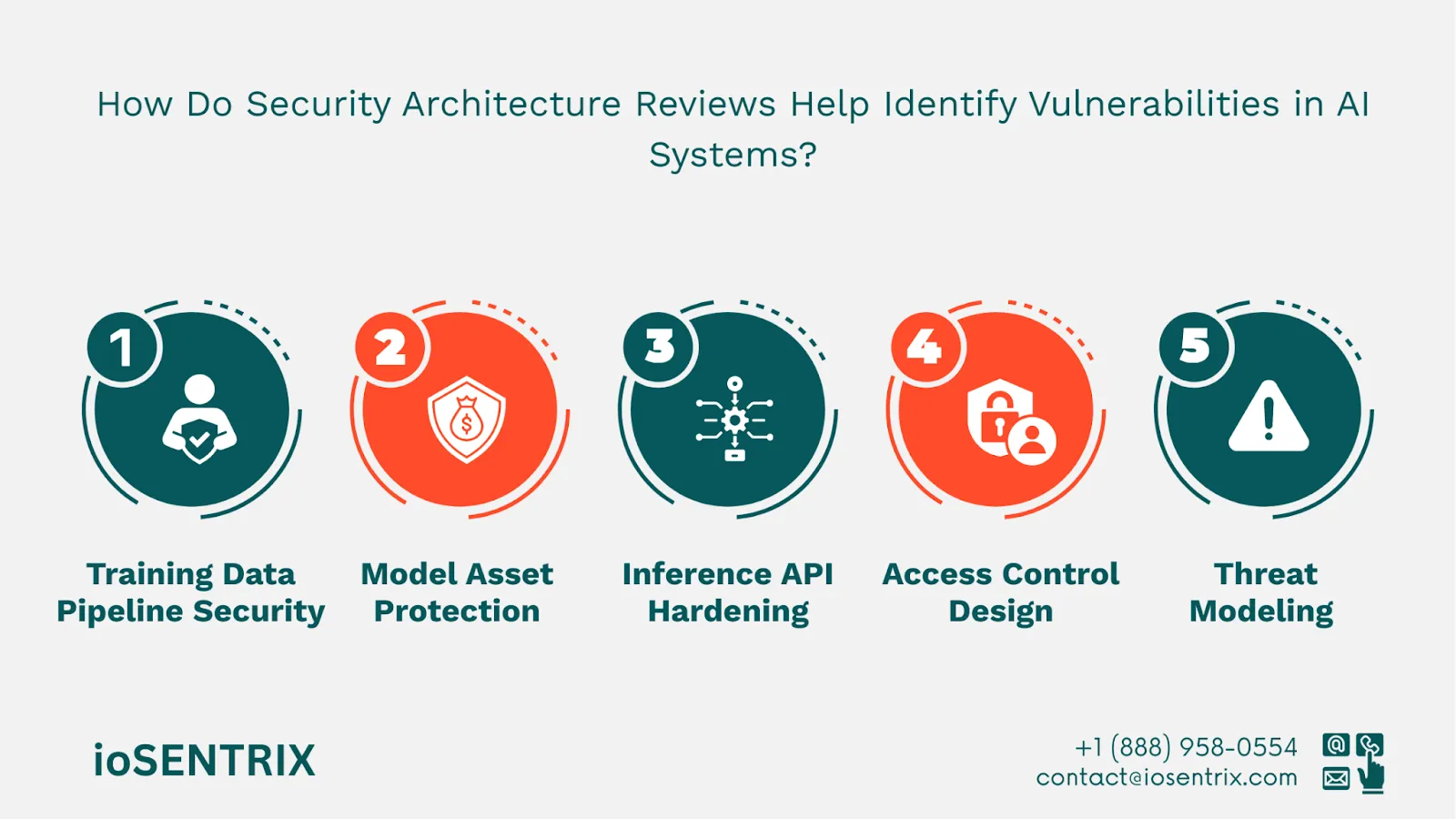

While penetration testing uncovers technical flaws, a Secure Architecture Review examines AI system security at the design level.

Experts evaluate:

Embedding ethics into AI governance ensures that security controls also address:

Documenting system behavior, decision paths, and training data provenance helps:

Transparency also reduces “black box” risks by enabling explainable AI (XAI), ensuring that stakeholders understand both the capabilities and limitations of the system.

Based on our experience at ioSENTRIX, here are essential guidelines for secure AI/LLM architecture:

We go beyond conventional assessments to deliver comprehensive AI security solutions. Our Application Security services include Architecture Reviews, Threat Modeling, Penetration Testing, and Code Reviews, ensuring that every component of your AI ecosystem is assessed for risk.

We specialize in:

The pace of AI innovation shows no signs of slowing—but neither do the threats. LLMs’ unpredictability and access to sensitive data make them powerful yet risky.

A Secure Architecture Review remains the most effective way to ensure AI system security. By embedding security into design, validating it through continuous testing, and reinforcing it with LLM threat mitigation strategies such as prompt injection prevention, organizations can fully harness the potential of AI while minimizing risks.

A Secure Architecture Review is a structured assessment of an AI or LLM system’s design, infrastructure, and deployment. It identifies hidden attack surfaces, such as insecure training data pipelines, model weights, and inference APIs, before they can be exploited, ensuring the system is resilient against emerging cyber threats.

Unlike conventional applications, LLMs generate unpredictable outputs and adapt to diverse inputs, making them susceptible to unique threats like prompt injection, model inversion, data leakage, and adversarial inputs. These risks require security reviews tailored specifically to AI systems rather than generic application assessments.

Strong encryption (e.g., AES-256, TLS 1.3) safeguards sensitive data at rest and in transit, while advanced techniques like homomorphic encryption and differential privacy further protect training datasets. Continuous monitoring with anomaly detection helps spot abnormal activity, preventing data leaks or malicious model manipulation.

Reviews should be conducted during initial development, before major updates, and periodically after deployment. Continuous testing, penetration tests, and red team exercises are recommended to keep pace with evolving AI threats and infrastructure changes.

Ethical oversight ensures that AI systems are not only technically secure but also responsibly deployed. It includes bias detection, prevention of harmful content generation, and transparency in decision-making processes. This builds user trust and supports regulatory compliance.