Large Language Models (LLMs) and AI systems are revolutionizing industries, powering applications from customer service bots to advanced data analytics. However, as these technologies proliferate, they introduce novel vulnerabilities that traditional security testing methods often overlook.

Penetration Testing (Pentesting) for AI and LLM systems focuses on identifying these unique risks to ensure comprehensive protection. This blog explores the critical role of Pentesting in securing AI systems and how ioSENTRIX leverages advanced techniques to protect your AI-powered assets.

Traditional pentesting focuses on finding vulnerabilities in code, networks, and infrastructure. However, AI systems introduce a new layer of complexity:

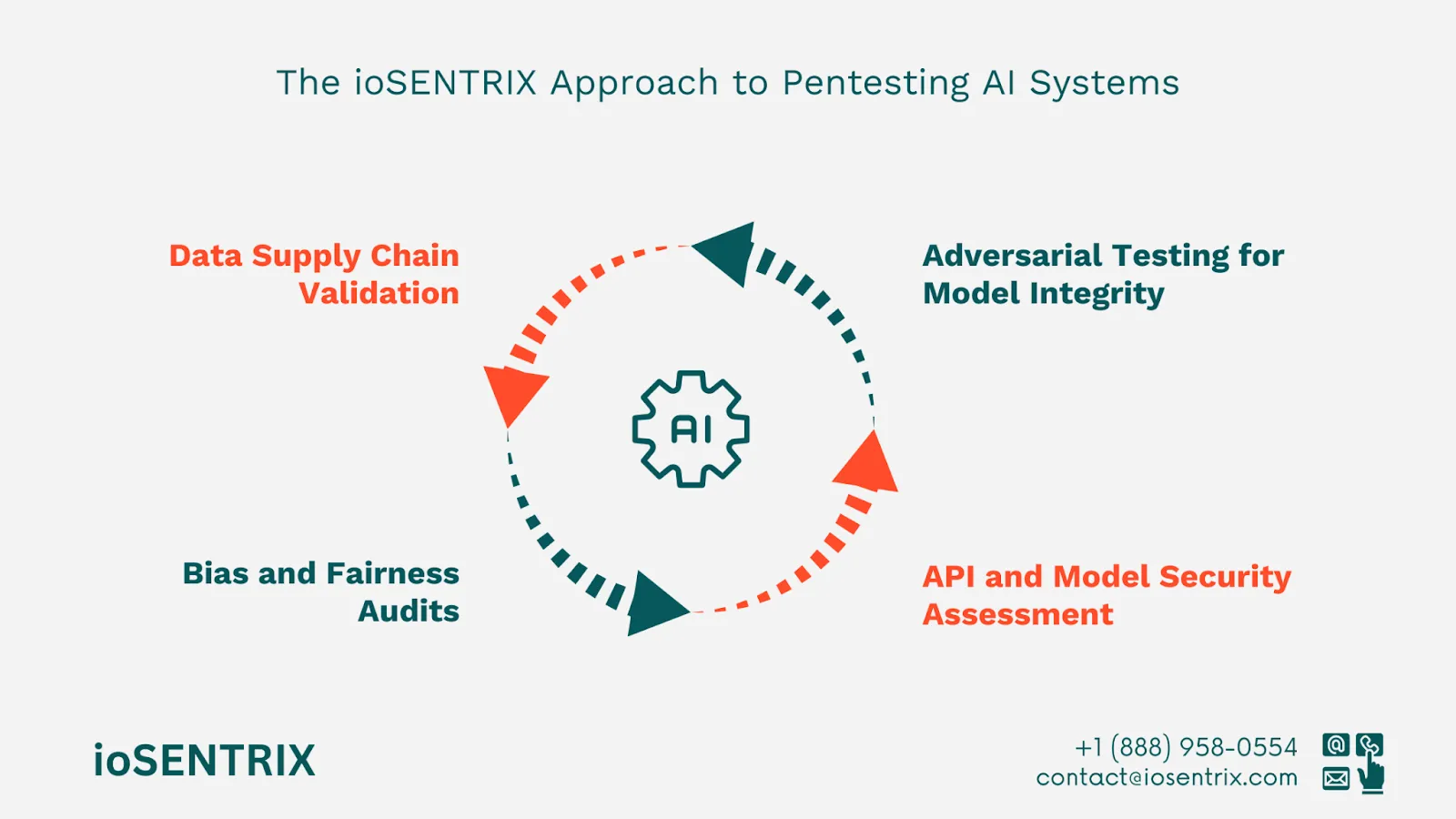

ioSENTRIX employs a specialized framework for Pentesting LLMs and AI systems, designed to uncover vulnerabilities across all components of your AI stack.

We simulate adversarial attacks to test the system’s resilience against malicious inputs.

Example: Testing a customer support chatbot to ensure it cannot be tricked into revealing sensitive company information.

Data integrity is critical for AI. We assess vulnerabilities in the data lifecycle:

Example: A financial AI application trained on poisoned data could offer misleading investment advice, causing financial harm.

AI systems often expose APIs, which can be a major attack vector.

Example: Ensuring that a healthcare AI’s API cannot be abused to infer sensitive patient data.

Bias can be exploited, leading to unintended harmful consequences. We test:

Example: Ensuring an AI-driven hiring tool does not unintentionally discriminate based on gender or ethnicity.

A leading e-commerce company partnered with ioSENTRIX to test its AI recommendation engine. Here’s how we helped:

Assess vulnerabilities in their AI system’s data pipeline, API, and model integrity.

The company significantly improved the robustness of its AI platform, enhancing both security and customer trust.

Pentesting provides several essential benefits for organizations deploying AI systems:

As AI and LLM systems become integral to modern business operations, their security must evolve to meet new challenges. ioSENTRIX’s specialized Pentesting services ensure your AI assets remain secure, resilient, and compliant.

Protect your AI systems today. Contact ioSENTRIX to schedule a comprehensive penetration test for your AI and LLM systems.