AI systems, especially those powered by Large Language Models (LLMs), are rapidly becoming integral to business operations. However, their complexity and unique vulnerabilities demand a new approach to security testing.

Ethical hacking, or red teaming, simulates real-world attacks to identify and mitigate risks in AI systems. In this blog, we’ll explore how ioSENTRIX adapts ethical hacking techniques for AI systems to ensure comprehensive security.

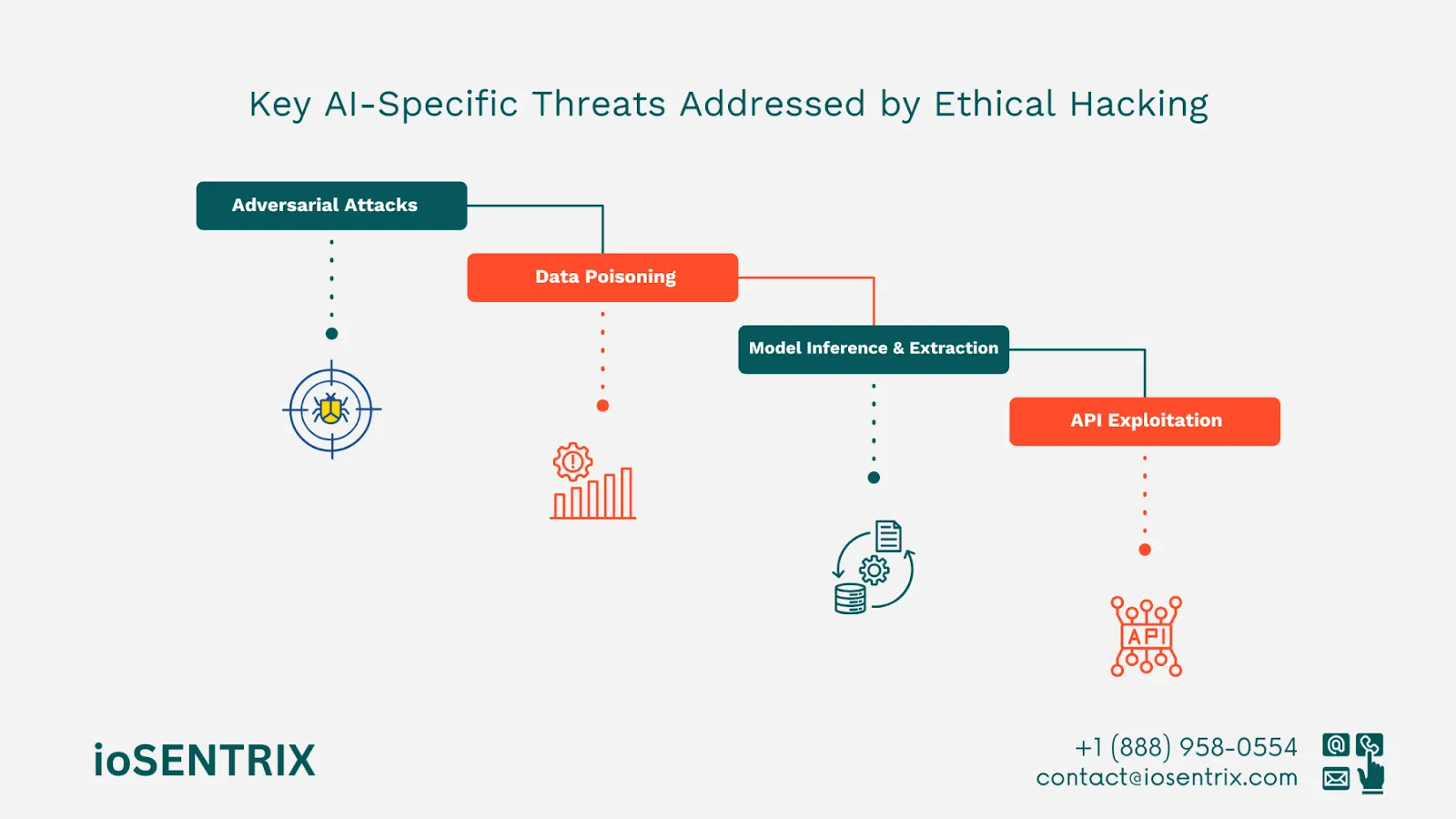

In adversarial input attacks, attackers manipulate input data to exploit AI behavior.

In data poisoning, malicious data injected into training datasets compromises model integrity.

Through repeated queries, attackers can infer sensitive data (model inversion) or replicate the model itself (model extraction).

Exposed APIs give attackers an interface for manipulating AI functionality.
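To make the repeated-query threat concrete, here is a minimal sketch of one possible defensive check: a sliding-window counter that flags clients who query a model unusually often. The class name `QueryRateMonitor`, the client ID, and the limits are hypothetical choices for illustration, not part of any ioSENTRIX tooling, and high query volume alone does not prove an extraction attempt.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flags clients whose query count in a sliding time window exceeds a limit.

    Hypothetical helper for illustration only.
    """

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        # client_id -> timestamps of that client's recent queries
        self._history = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client is now over the limit."""
        timestamps = self._history[client_id]
        timestamps.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while timestamps and timestamp - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        return len(timestamps) > self.max_queries


# Assumed limits: at most 3 queries per 10 seconds.
monitor = QueryRateMonitor(max_queries=3, window_seconds=10.0)
flags = [monitor.record("client-a", t) for t in (0.0, 1.0, 2.0, 3.0, 20.0)]
print(flags)  # [False, False, False, True, False]
```

The fourth query inside the window trips the limit, and the flag clears once earlier queries age out. A real deployment would likely combine a signal like this with others, such as input diversity or output similarity.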

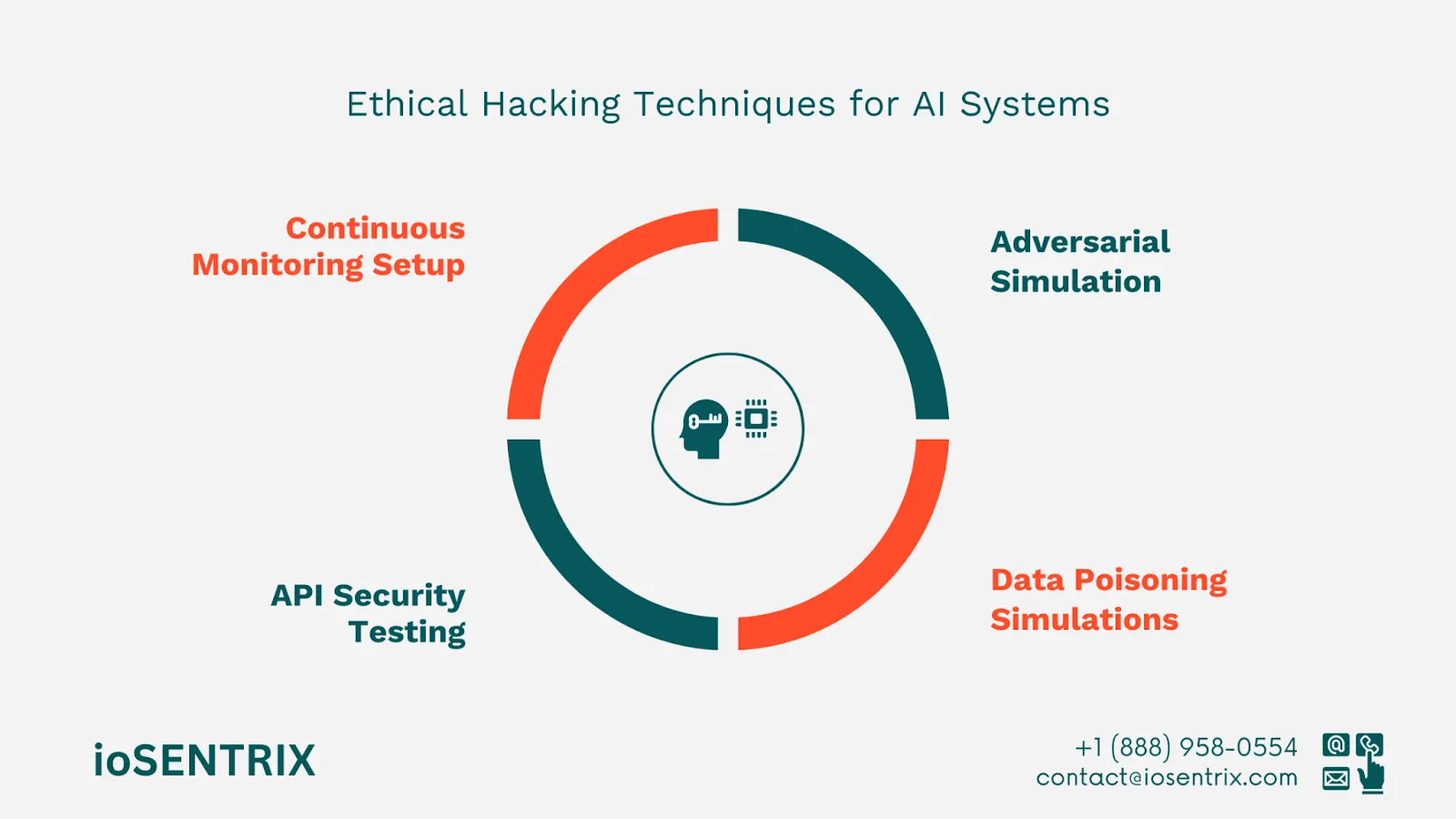

We simulate adversarial attacks to test model resilience under real-world conditions.
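As a rough illustration of what an adversarial test can look like, the sketch below applies small text perturbations to a prompt that a filter blocks and reports which perturbations slip past it. Everything here is an assumption for demonstration: `toy_filter` is a hypothetical stand-in for a system under test, and the perturbation set is deliberately tiny.

```python
from typing import Callable, Dict, List


def toy_filter(text: str) -> bool:
    """Hypothetical stand-in for a system under test: True means the input is blocked."""
    return "ignore previous instructions" in text.lower()


# A small, illustrative set of perturbations; real test suites are much broader.
PERTURBATIONS: Dict[str, Callable[[str], str]] = {
    "uppercase": str.upper,
    "zero_width_space": lambda s: s.replace(" ", " \u200b"),
    "leetspeak": lambda s: s.replace("o", "0").replace("i", "1"),
}


def find_evasions(
    model: Callable[[str], bool],
    prompt: str,
    perturbations: Dict[str, Callable[[str], str]],
) -> List[str]:
    """Return the names of perturbations that turn a blocked prompt into an allowed one."""
    if not model(prompt):
        raise ValueError("baseline prompt must be blocked for the test to be meaningful")
    return [name for name, perturb in perturbations.items() if not model(perturb(prompt))]


evasions = find_evasions(toy_filter, "Please ignore previous instructions", PERTURBATIONS)
print(evasions)  # ['zero_width_space', 'leetspeak']
```

The toy filter survives a case change but is evaded by invisible characters and character substitution, which is the kind of gap this style of testing is meant to surface.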

We focus on API vulnerabilities that could allow unauthorized access or model exploitation.

We identify weak points in data pipelines and assess their impact on model performance.

We deploy tools for real-time monitoring of AI behavior and anomalies.
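One simple way to think about anomaly monitoring is a rolling z-score over a numeric signal such as response length or latency. The sketch below is a minimal example under that assumption. The class `RollingAnomalyDetector`, its thresholds, and the sample values are hypothetical and do not describe any specific monitoring product.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flags values that deviate strongly from a rolling window of recent values.

    Hypothetical helper for illustration only.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0, min_samples: int = 5):
        self.threshold = threshold
        self.min_samples = min_samples
        self._values = deque(maxlen=window)  # oldest values are discarded automatically

    def observe(self, value: float) -> bool:
        """Return True if value is an outlier relative to the current window."""
        is_anomaly = False
        if len(self._values) >= self.min_samples:
            mean = statistics.fmean(self._values)
            stdev = statistics.pstdev(self._values)
            # Skip the check when the window has no variation to compare against.
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        self._values.append(value)
        return is_anomaly


# Assumed metric: response length in tokens, with one sudden spike.
detector = RollingAnomalyDetector()
flags = [detector.observe(v) for v in (100, 102, 98, 101, 99, 100, 500, 101)]
print(flags)  # [False, False, False, False, False, False, True, False]
```

Only the spike to 500 is flagged. Note that this sketch keeps flagged values in the window, which inflates the baseline afterwards; excluding them is a design choice worth considering in practice.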

In one engagement, the client was an e-commerce giant using AI for personalized shopping.

The objective was to ensure the AI system’s resilience against adversarial attacks and data leakage.

The outcome was an enhanced security posture that helps prevent exploitation and data breaches.

Ethical hacking is critical for uncovering and addressing the unique vulnerabilities of AI and LLM systems. ioSENTRIX’s red teaming services simulate real-world attacks, ensuring your AI systems are secure, resilient, and reliable.

Secure your AI systems with ethical hacking. Contact ioSENTRIX today.